Linear Algebra

Linear algebra provides the mathematical framework for representing signals as vectors, understanding transformations, and analyzing multi-dimensional systems. These concepts are essential for understanding the Fourier transform, filter design, and state-space analysis.

Vectors

Definition

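A vector $\mathbf{x} \in \mathbb{R}^n$ is an ordered list of $n$ numbers, usually written as a column: $\mathbf{x} = [x_1, x_2, \ldots, x_n]^T$.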

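In signal processing, a discrete signal $x[n]$ with $N$ samples is represented as the vector $\mathbf{x} = [x[0], x[1], \ldots, x[N-1]]^T \in \mathbb{R}^N$ (or $\mathbb{C}^N$ for complex-valued signals).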
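As a minimal sketch of this idea, the following samples a sinusoid into a NumPy vector. The sinusoid, its frequency `f0`, the sampling rate `fs`, and the length `N` are illustrative assumptions, not values from the text. Once sampled, scaling and offsetting the signal are ordinary vector operations applied sample by sample.

```python
import numpy as np

# Illustrative parameters (assumed for this example)
fs = 8.0    # sampling rate in Hz
f0 = 1.0    # sinusoid frequency in Hz
N = 8       # number of samples

# Sample x[n] = cos(2*pi*f0*n/fs) for n = 0, ..., N-1
n = np.arange(N)
x = np.cos(2 * np.pi * f0 * n / fs)

# The signal is now an ordinary vector in R^N
print(f"x = {np.round(x, 4)}")
print(f"Shape: {x.shape}")

# Attenuating the signal and adding a DC offset are vector operations
y = 0.5 * x + np.ones(N)
print(f"0.5*x + 1 = {np.round(y, 4)}")
```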

Properties

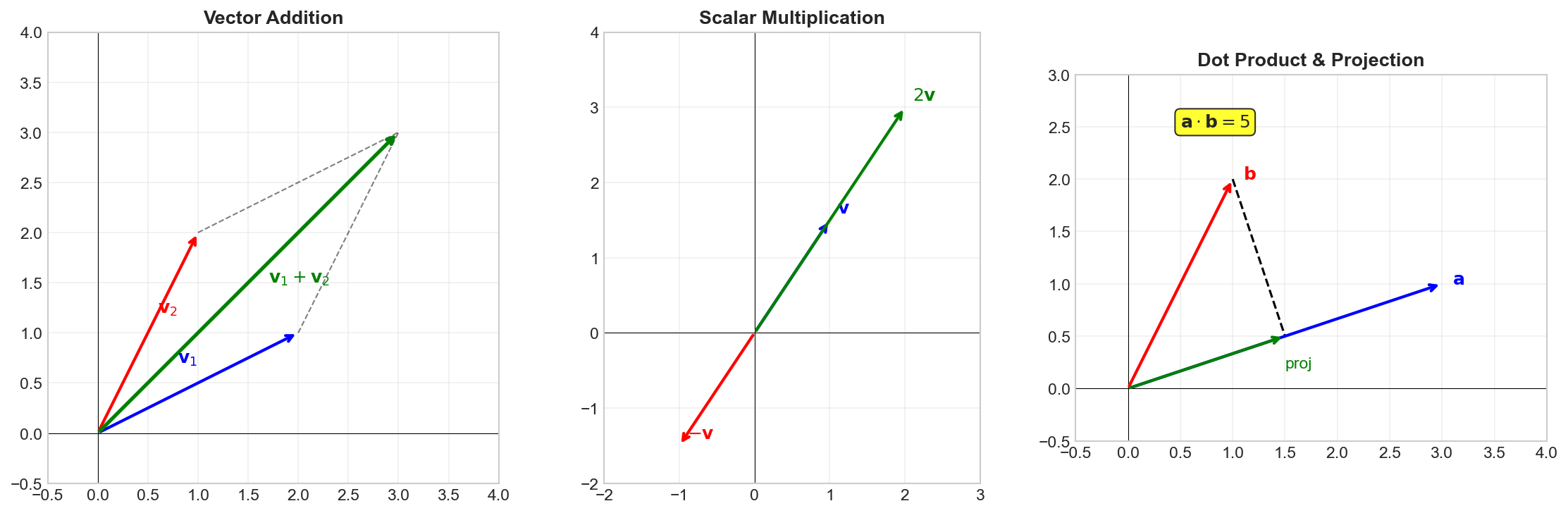

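Vector Addition: $\mathbf{x} + \mathbf{y} = [x_1 + y_1, x_2 + y_2, \ldots, x_n + y_n]^T$

Scalar Multiplication: $\alpha\mathbf{x} = [\alpha x_1, \alpha x_2, \ldots, \alpha x_n]^T$ for a scalar $\alpha$

Linear Combination: A vector $\mathbf{w}$ is a linear combination of $\mathbf{v}_1, \ldots, \mathbf{v}_k$ if $\mathbf{w} = c_1\mathbf{v}_1 + c_2\mathbf{v}_2 + \cdots + c_k\mathbf{v}_k$ for some scalars $c_1, \ldots, c_k$.

Linear Independence: Vectors $\mathbf{v}_1, \ldots, \mathbf{v}_k$ are linearly independent if the only solution to $c_1\mathbf{v}_1 + \cdots + c_k\mathbf{v}_k = \mathbf{0}$ is $c_1 = c_2 = \cdots = c_k = 0$.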

Python Implementation

```python
import numpy as np

# Define vectors
v1 = np.array([1, 2, 3])
v2 = np.array([4, 5, 6])

# Vector operations
print(f"v1 = {v1}")
print(f"v2 = {v2}")
print(f"v1 + v2 = {v1 + v2}")
print(f"2 * v1 = {2 * v1}")

# Linear combination
c1, c2 = 0.5, 0.3
w = c1 * v1 + c2 * v2
print(f"\n{c1}*v1 + {c2}*v2 = {w}")

# Check linear independence using rank
V = np.column_stack([v1, v2])
rank = np.linalg.matrix_rank(V)
print(f"\nMatrix rank: {rank}")
print(f"Vectors are {'linearly independent' if rank == 2 else 'linearly dependent'}")
```

Inner Products and Norms

Definition

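The inner product (dot product) of two real vectors $\mathbf{x}, \mathbf{y} \in \mathbb{R}^n$ is $\langle \mathbf{x}, \mathbf{y} \rangle = \mathbf{x}^T\mathbf{y} = \sum_{i=1}^{n} x_i y_i$.

For complex vectors $\mathbf{x}, \mathbf{y} \in \mathbb{C}^n$, the inner product is $\langle \mathbf{x}, \mathbf{y} \rangle = \mathbf{x}^H\mathbf{y} = \sum_{i=1}^{n} \overline{x_i}\, y_i$,

where $\mathbf{x}^H$ denotes the conjugate (Hermitian) transpose of $\mathbf{x}$ and $\overline{x_i}$ is the complex conjugate of $x_i$.

The norm (length) of a vector is: $\|\mathbf{x}\| = \sqrt{\langle \mathbf{x}, \mathbf{x} \rangle} = \sqrt{\sum_{i=1}^{n} |x_i|^2}$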

Properties

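Cauchy-Schwarz Inequality: $|\langle \mathbf{x}, \mathbf{y} \rangle| \le \|\mathbf{x}\|\,\|\mathbf{y}\|$

Orthogonality: Two vectors are orthogonal if $\langle \mathbf{x}, \mathbf{y} \rangle = 0$.

Angle Between Vectors: For real vectors, $\cos\theta = \dfrac{\langle \mathbf{x}, \mathbf{y} \rangle}{\|\mathbf{x}\|\,\|\mathbf{y}\|}$

Parseval's Theorem: For an orthonormal basis $\{\mathbf{e}_1, \ldots, \mathbf{e}_n\}$, $\|\mathbf{x}\|^2 = \sum_{i=1}^{n} |\langle \mathbf{e}_i, \mathbf{x} \rangle|^2$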
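The following sketch is a quick numerical check of Cauchy-Schwarz and Parseval. The random test vectors are arbitrary. For Parseval's theorem, the orthonormal basis is assumed to be the normalized DFT basis, whose coefficients `np.fft.fft(..., norm="ortho")` returns.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal(16)
y = rng.standard_normal(16)

# Cauchy-Schwarz: |<x, y>| <= ||x|| ||y||
lhs = abs(np.dot(x, y))
rhs = np.linalg.norm(x) * np.linalg.norm(y)
print(f"|<x, y>| = {lhs:.4f} <= ||x|| ||y|| = {rhs:.4f}")

# Parseval: in an orthonormal basis, the energy of x equals the energy of
# its coefficients. norm="ortho" scales the DFT by 1/sqrt(N), which makes
# the DFT basis vectors orthonormal.
coeffs = np.fft.fft(x, norm="ortho")
energy_time = np.sum(np.abs(x) ** 2)
energy_coeffs = np.sum(np.abs(coeffs) ** 2)
print(f"||x||^2          = {energy_time:.6f}")
print(f"sum |<e_k, x>|^2 = {energy_coeffs:.6f}")
```

Any other orthonormal basis (for example, the columns of an orthogonal matrix) would satisfy the same identity. The DFT basis is used here only because it is the one most relevant to later sections.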

Python Implementation

```python
import numpy as np

# Real vectors
u = np.array([1, 2, 3])
v = np.array([4, 5, 6])

# Inner product and norm
inner_product = np.dot(u, v)
norm_u = np.linalg.norm(u)
norm_v = np.linalg.norm(v)
print(f"u = {u}")
print(f"v = {v}")
print(f"<u, v> = {inner_product}")
print(f"||u|| = {norm_u:.4f}")
print(f"||v|| = {norm_v:.4f}")

# Angle between vectors
cos_theta = inner_product / (norm_u * norm_v)
theta = np.arccos(cos_theta)
print(f"\nAngle between u and v: {np.degrees(theta):.2f}°")

# Complex vectors
u_complex = np.array([1 + 1j, 2 - 1j])
v_complex = np.array([1 - 1j, 1 + 1j])

# Hermitian inner product: u^H * v
inner_complex = np.vdot(u_complex, v_complex)
print(f"\nComplex inner product: {inner_complex}")

# Orthogonal vectors example
e1 = np.array([1, 0, 0])
e2 = np.array([0, 1, 0])
print(f"\n<e1, e2> = {np.dot(e1, e2)} (orthogonal)")
```

Matrices

Definition

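A matrix $\mathbf{A} \in \mathbb{R}^{m \times n}$ is a rectangular array of numbers with $m$ rows and $n$ columns, where $a_{ij}$ denotes the entry in row $i$ and column $j$. It represents a linear transformation from $\mathbb{R}^n$ to $\mathbb{R}^m$.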

Properties

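Matrix-Vector Multiplication: For $\mathbf{A} \in \mathbb{R}^{m \times n}$ and $\mathbf{x} \in \mathbb{R}^n$, $\mathbf{y} = \mathbf{A}\mathbf{x} \in \mathbb{R}^m$ with $y_i = \sum_{j=1}^{n} a_{ij} x_j$

Matrix-Matrix Multiplication: For $\mathbf{A} \in \mathbb{R}^{m \times n}$ and $\mathbf{B} \in \mathbb{R}^{n \times p}$, $\mathbf{C} = \mathbf{A}\mathbf{B} \in \mathbb{R}^{m \times p}$ with $c_{ij} = \sum_{k=1}^{n} a_{ik} b_{kj}$

Transpose: $(\mathbf{A}^T)_{ij} = a_{ji}$

Important Identities: $(\mathbf{A}\mathbf{B})^T = \mathbf{B}^T\mathbf{A}^T$, $(\mathbf{A}\mathbf{B})\mathbf{C} = \mathbf{A}(\mathbf{B}\mathbf{C})$, and in general $\mathbf{A}\mathbf{B} \neq \mathbf{B}\mathbf{A}$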
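The index formula for matrix-vector multiplication can be checked directly against NumPy's `@` operator. The sketch below does this with explicit loops and with the equivalent view of $\mathbf{A}\mathbf{x}$ as a linear combination of the columns of $\mathbf{A}$, weighted by the entries of $\mathbf{x}$. It then shows that matrix products do not commute in general. The matrices `A` and `x` match this section's implementation; `B` and `M` are arbitrary examples chosen for illustration.

```python
import numpy as np

A = np.array([[1, 2, 3],
              [4, 5, 6]])
x = np.array([1, 0, -1])

# y_i = sum_j a_ij x_j, computed with explicit loops
m, n = A.shape
y_loops = np.zeros(m)
for i in range(m):
    for j in range(n):
        y_loops[i] += A[i, j] * x[j]

# Equivalent view: Ax is a linear combination of the columns of A
y_columns = sum(x[j] * A[:, j] for j in range(n))

print(f"Loop result:  {y_loops}")
print(f"Column combo: {y_columns}")
print(f"A @ x:        {A @ x}")

# Matrix products generally do not commute
B = np.array([[0, 1],
              [1, 0]])
M = np.array([[1, 2],
              [3, 4]])
print(f"\nMB =\n{M @ B}")
print(f"BM =\n{B @ M}")
```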

Python Implementation

```python
import numpy as np

# Define a matrix
A = np.array([[1, 2, 3],
              [4, 5, 6]])

# Matrix properties
print(f"A =\n{A}")
print(f"Shape: {A.shape}")
print(f"Transpose:\n{A.T}")

# Matrix-vector multiplication
x = np.array([1, 0, -1])
y = A @ x  # or np.dot(A, x)
print(f"\nx = {x}")
print(f"A @ x = {y}")

# Matrix-matrix multiplication
B = np.array([[1, 2],
              [3, 4],
              [5, 6]])
C = A @ B
print(f"\nB =\n{B}")
print(f"A @ B =\n{C}")

# Verify (AB)^T = B^T A^T
print(f"\n(AB)^T =\n{C.T}")
print(f"B^T A^T =\n{B.T @ A.T}")
```

Special Matrices

Definition

Several special matrix types appear frequently in signal processing:

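Identity Matrix: $\mathbf{I}$ has ones on the diagonal and zeros elsewhere, so $\mathbf{I}\mathbf{x} = \mathbf{x}$ for every $\mathbf{x}$.

Diagonal Matrix: $\mathbf{D} = \operatorname{diag}(d_1, \ldots, d_n)$, with $d_{ij} = 0$ for $i \neq j$.

Symmetric Matrix: $\mathbf{A} = \mathbf{A}^T$

Hermitian Matrix: $\mathbf{A} = \mathbf{A}^H$ (the complex counterpart of a symmetric matrix)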

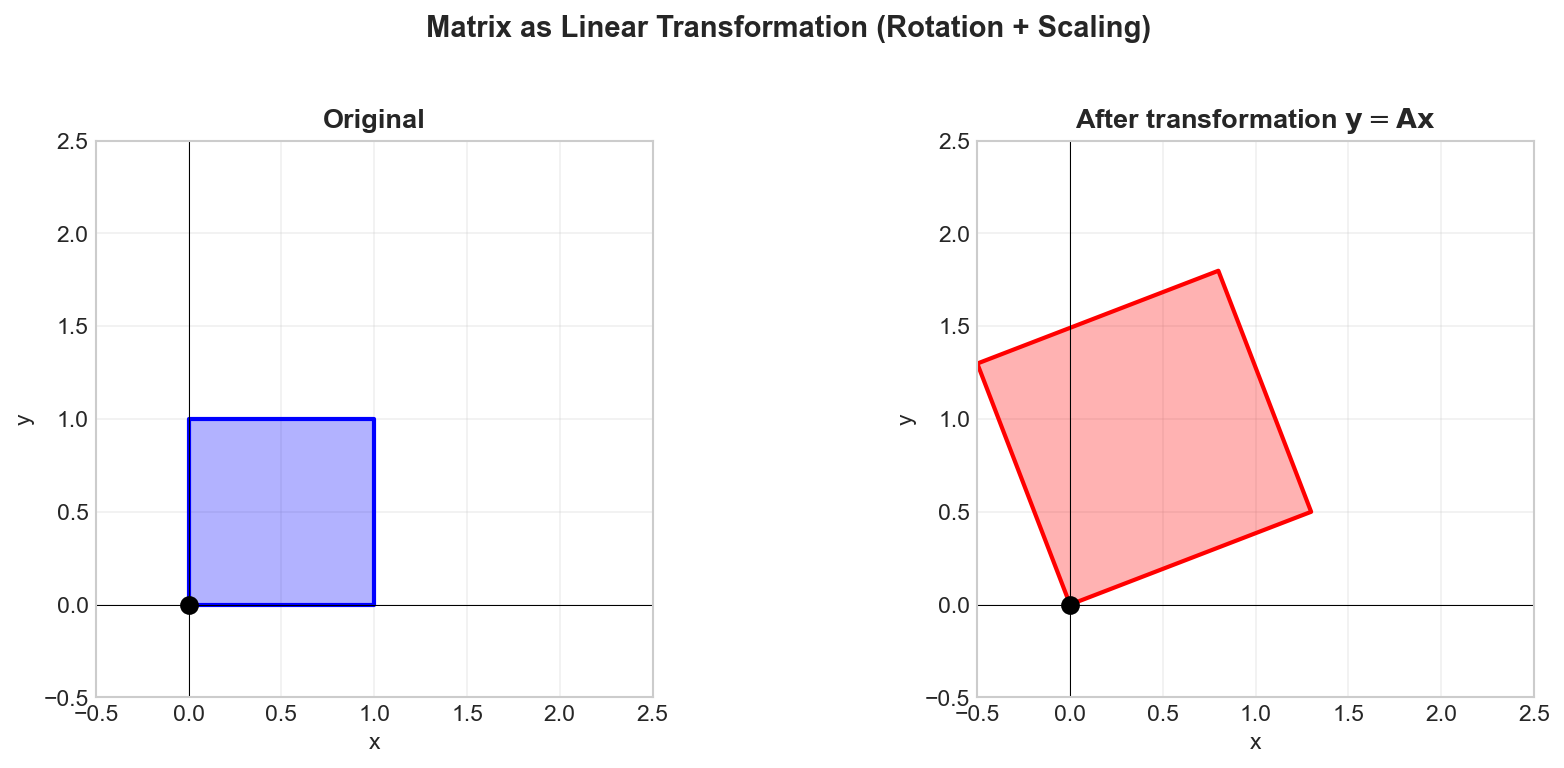

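Orthogonal Matrix: $\mathbf{Q}^T\mathbf{Q} = \mathbf{Q}\mathbf{Q}^T = \mathbf{I}$, so $\mathbf{Q}^{-1} = \mathbf{Q}^T$

Unitary Matrix: $\mathbf{U}^H\mathbf{U} = \mathbf{U}\mathbf{U}^H = \mathbf{I}$, so $\mathbf{U}^{-1} = \mathbf{U}^H$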
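The Hermitian and unitary definitions apply to complex matrices. This sketch checks that a Hermitian matrix has real eigenvalues and that the normalized DFT matrix is unitary and preserves the norm of a complex vector. The matrix `H` and the vector `z` are arbitrary choices for illustration. `np.linalg.eigvalsh` is NumPy's eigenvalue routine for Hermitian or symmetric matrices.

```python
import numpy as np

# A small Hermitian matrix (chosen for illustration): H equals its conjugate transpose
H = np.array([[2, 1 - 1j],
              [1 + 1j, 3]])
print(f"H == H^H: {np.allclose(H, H.conj().T)}")

# Hermitian matrices have real eigenvalues; eigvalsh returns them as real floats
print(f"Eigenvalues of H: {np.linalg.eigvalsh(H)}")

# The normalized N-point DFT matrix is unitary: U^H U = I
N = 4
n = np.arange(N)
U = np.exp(-2j * np.pi * np.outer(n, n) / N) / np.sqrt(N)
print(f"U^H @ U == I: {np.allclose(U.conj().T @ U, np.eye(N))}")

# Unitary matrices preserve the norm of complex vectors
z = np.array([1 + 2j, -1j, 3, 0.5])
print(f"||z|| = {np.linalg.norm(z):.4f}, ||Uz|| = {np.linalg.norm(U @ z):.4f}")
```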

Properties

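Orthogonal/Unitary matrices preserve norms: $\|\mathbf{Q}\mathbf{x}\| = \|\mathbf{x}\|$

Toeplitz Matrix: Constant along each diagonal, $t_{ij} = t_{i-j}$. Linear convolution with a fixed filter can be written as multiplication by a Toeplitz matrix.

Circulant Matrix: A Toeplitz matrix with circular structure, $c_{ij} = c_{(i-j) \bmod n}$. Each column is a cyclic shift of the previous one, multiplication by it performs circular convolution, and it is diagonalized by the DFT.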
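Both structures can be checked numerically with a minimal sketch. It builds a Toeplitz matrix for linear convolution with `scipy.linalg.toeplitz` and compares it against `np.convolve`. It then builds a circulant matrix for $N$-point circular convolution with `scipy.linalg.circulant` and compares it against an FFT-based circular convolution. The filter `h` and the signal `x` are arbitrary illustrative values.

```python
import numpy as np
from scipy.linalg import toeplitz, circulant

h = np.array([1.0, 0.5, 0.25])      # filter taps (illustrative)
x = np.array([1.0, 2.0, 3.0, 4.0])  # input signal (illustrative)
N, M = len(x), len(h)

# Linear convolution as a Toeplitz matrix: the first column is h padded with
# zeros to length N + M - 1, the first row is [h[0], 0, ..., 0] of length N
col = np.concatenate([h, np.zeros(N - 1)])
row = np.concatenate([[h[0]], np.zeros(N - 1)])
T = toeplitz(col, row)              # shape (N + M - 1, N)
y_toeplitz = T @ x
y_conv = np.convolve(h, x)
print(f"Toeplitz result: {y_toeplitz}")
print(f"np.convolve:     {y_conv}")

# Circular convolution as a circulant matrix: the first column is h padded to N
h_pad = np.concatenate([h, np.zeros(N - M)])
C = circulant(h_pad)                # shape (N, N)
y_circ = C @ x
y_fft = np.real(np.fft.ifft(np.fft.fft(h_pad) * np.fft.fft(x)))
print(f"\nCirculant result: {y_circ}")
print(f"Via FFT:          {np.round(y_fft, 10)}")
```

The circular result differs from the linear one because the tail of the linear convolution wraps around to the start of the output.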

Python Implementation

```python
import numpy as np
from scipy.linalg import toeplitz, circulant

# Identity matrix
I = np.eye(3)
print(f"Identity matrix:\n{I}")

# Diagonal matrix
D = np.diag([1, 2, 3])
print(f"\nDiagonal matrix:\n{D}")

# Symmetric matrix
A = np.array([[1, 2, 3],
              [2, 4, 5],
              [3, 5, 6]])
print(f"\nSymmetric matrix:\n{A}")
print(f"A == A^T: {np.allclose(A, A.T)}")

# Orthogonal matrix (rotation)
theta = np.pi / 4
Q = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta), np.cos(theta)]])
print(f"\nOrthogonal matrix (rotation by 45°):\n{Q}")
print(f"Q^T @ Q =\n{Q.T @ Q}")

# Verify norm preservation
x = np.array([1, 2])
print(f"\n||x|| = {np.linalg.norm(x):.4f}")
print(f"||Qx|| = {np.linalg.norm(Q @ x):.4f}")

# Toeplitz matrix (convolution structure)
c = [1, 2, 3]  # first column
r = [1, 4, 5]  # first row
T = toeplitz(c, r)
print(f"\nToeplitz matrix:\n{T}")

# Circulant matrix (DFT structure)
c = [1, 2, 3, 4]
C = circulant(c)
print(f"\nCirculant matrix:\n{C}")
```

Eigenvalues and Eigenvectors

Definition

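For a square matrix $\mathbf{A} \in \mathbb{R}^{n \times n}$, a nonzero vector $\mathbf{v}$ is an eigenvector with eigenvalue $\lambda$ if $\mathbf{A}\mathbf{v} = \lambda\mathbf{v}$, meaning that applying $\mathbf{A}$ only scales $\mathbf{v}$.

Eigenvalues are found by solving the characteristic equation: $\det(\mathbf{A} - \lambda\mathbf{I}) = 0$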
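For small matrices, the characteristic equation can be solved directly. This sketch uses the same $2 \times 2$ matrix as this section's implementation. `np.poly` applied to a square matrix returns the coefficients of $\det(\lambda\mathbf{I} - \mathbf{A})$. That polynomial differs from $\det(\mathbf{A} - \lambda\mathbf{I})$ only by a factor of $(-1)^n$, so it has the same roots. In practice, eigenvalue routines such as `np.linalg.eig` do not go through the characteristic polynomial, because polynomial root-finding is numerically fragile for larger matrices.

```python
import numpy as np

A = np.array([[4, 2],
              [1, 3]])

# Coefficients of det(lambda*I - A), highest power first
coeffs = np.poly(A)
print(f"Characteristic polynomial coefficients: {coeffs}")  # lambda^2 - 7*lambda + 10

# The eigenvalues are the roots of that polynomial
roots = np.sort(np.roots(coeffs))
print(f"Roots:             {roots}")
print(f"np.linalg.eigvals: {np.sort(np.linalg.eigvals(A))}")
```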

Properties

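Eigendecomposition: If $\mathbf{A}$ has $n$ linearly independent eigenvectors, it can be factored as $\mathbf{A} = \mathbf{V}\boldsymbol{\Lambda}\mathbf{V}^{-1}$,

where the columns of $\mathbf{V}$ are the eigenvectors and $\boldsymbol{\Lambda} = \operatorname{diag}(\lambda_1, \ldots, \lambda_n)$ holds the corresponding eigenvalues.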
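The eigendecomposition turns matrix powers, which appear when a state-space system is stepped forward in time, into scalar powers: $\mathbf{A}^k = \mathbf{V}\boldsymbol{\Lambda}^k\mathbf{V}^{-1}$. The sketch below assumes $\mathbf{A}$ is diagonalizable. The matrix and the exponent `k` are illustrative, and the result is compared against `np.linalg.matrix_power`.

```python
import numpy as np

A = np.array([[4.0, 2.0],
              [1.0, 3.0]])
k = 5

# Eigendecomposition A = V diag(lam) V^{-1}
lam, V = np.linalg.eig(A)

# A^k = V diag(lam^k) V^{-1}: only the eigenvalues are raised to the power
A_k_eig = V @ np.diag(lam ** k) @ np.linalg.inv(V)
A_k_direct = np.linalg.matrix_power(A, k)

print(f"A^{k} via eigendecomposition:\n{np.round(A_k_eig.real, 6)}")
print(f"A^{k} via repeated multiplication:\n{A_k_direct}")
```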

Spectral Properties:

- Eigenvalues of $\mathbf{A}^k$ are $\lambda_i^k$

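Symmetric Matrices (real, $\mathbf{A} = \mathbf{A}^T$):

- All eigenvalues are real

- Eigenvectors belonging to distinct eigenvalues are orthogonal, and an orthonormal set of eigenvectors can always be chosen

- Spectral decomposition: $\mathbf{A} = \mathbf{Q}\boldsymbol{\Lambda}\mathbf{Q}^T$, where $\mathbf{Q}$ is orthogonal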
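The sketch below reconstructs the symmetric matrix $\mathbf{S}$ used in this section from its spectral decomposition. It also shows one consequence: in the eigenvector basis, the quadratic form $\mathbf{x}^T\mathbf{S}\mathbf{x}$ becomes a weighted sum of squares, $\sum_i \lambda_i (\mathbf{q}_i^T\mathbf{x})^2$. The test vector `x` is an arbitrary choice.

```python
import numpy as np

S = np.array([[3.0, 1.0],
              [1.0, 3.0]])

# eigh returns real eigenvalues (ascending) and orthonormal eigenvectors
lam, Q = np.linalg.eigh(S)

# Spectral decomposition: S = Q diag(lam) Q^T
S_reconstructed = Q @ np.diag(lam) @ Q.T
print(f"Eigenvalues: {lam}")
print(f"Q diag(lam) Q^T =\n{S_reconstructed}")

# In the eigenvector basis, the quadratic form is a weighted sum of squares:
# x^T S x = sum_i lam_i * (q_i^T x)^2
x = np.array([1.0, -2.0])
quad_direct = x @ S @ x
quad_spectral = np.sum(lam * (Q.T @ x) ** 2)
print(f"x^T S x = {quad_direct:.4f}, spectral form = {quad_spectral:.4f}")
```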

Python Implementation

```python
import numpy as np

# Define a matrix
A = np.array([[4, 2],
              [1, 3]])

# Compute eigenvalues and eigenvectors
eigenvalues, eigenvectors = np.linalg.eig(A)
print(f"A =\n{A}")
print(f"\nEigenvalues: {eigenvalues}")
print(f"\nEigenvectors (columns):\n{eigenvectors}")

# Verify: A @ v = lambda * v for each eigenpair
for i in range(len(eigenvalues)):
    v = eigenvectors[:, i]
    lam = eigenvalues[i]
    Av = A @ v
    lam_v = lam * v
    print(f"\nEigenpair {i+1}:")
    print(f"  A @ v = {Av}")
    print(f"  λ * v = {lam_v}")
    print(f"  Match: {np.allclose(Av, lam_v)}")

# Eigendecomposition: A = V Λ V⁻¹
V = eigenvectors
Lambda = np.diag(eigenvalues)
A_reconstructed = V @ Lambda @ np.linalg.inv(V)
print(f"\nV @ Λ @ V⁻¹ =\n{A_reconstructed.real}")

# Symmetric matrix example
S = np.array([[3, 1],
              [1, 3]])
eig_vals, eig_vecs = np.linalg.eigh(S)  # use eigh for symmetric matrices
print(f"\nSymmetric matrix S =\n{S}")
print(f"Eigenvalues: {eig_vals}")
print(f"Eigenvectors are orthogonal: {np.allclose(eig_vecs.T @ eig_vecs, np.eye(2))}")
```

Matrix Inverse and Determinant

Definition

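The inverse of a square matrix $\mathbf{A}$ is the matrix $\mathbf{A}^{-1}$ satisfying $\mathbf{A}\mathbf{A}^{-1} = \mathbf{A}^{-1}\mathbf{A} = \mathbf{I}$.

A matrix is invertible (non-singular) if and only if $\det(\mathbf{A}) \neq 0$.

The determinant of a $2 \times 2$ matrix is $\det\begin{pmatrix} a & b \\ c & d \end{pmatrix} = ad - bc$.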
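For the $2 \times 2$ case, the inverse also has a closed form, $\mathbf{A}^{-1} = \frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$, which makes the role of the determinant explicit. The helper `inverse_2x2` below is a hypothetical name used only for this sketch. It is applied to the same matrix as this section's implementation and compared against `np.linalg.inv`.

```python
import numpy as np

def inverse_2x2(M):
    """Closed-form inverse of a 2x2 matrix [[a, b], [c, d]] (hypothetical helper)."""
    a, b = M[0]
    c, d = M[1]
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular (det = 0)")
    # Swap the diagonal entries, negate the off-diagonal ones, divide by det
    return np.array([[d, -b],
                     [-c, a]]) / det

A = np.array([[4.0, 7.0],
              [2.0, 6.0]])
print(f"ad - bc = {A[0, 0] * A[1, 1] - A[0, 1] * A[1, 0]}")
print(f"Closed-form inverse:\n{inverse_2x2(A)}")
print(f"np.linalg.inv:\n{np.linalg.inv(A)}")
```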

Properties

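Inverse of Products: $(\mathbf{A}\mathbf{B})^{-1} = \mathbf{B}^{-1}\mathbf{A}^{-1}$

Determinant of Products: $\det(\mathbf{A}\mathbf{B}) = \det(\mathbf{A})\det(\mathbf{B})$

Linear Systems: The solution of $\mathbf{A}\mathbf{x} = \mathbf{b}$ is $\mathbf{x} = \mathbf{A}^{-1}\mathbf{b}$.

(In practice, use LU decomposition or other numerical methods instead of forming $\mathbf{A}^{-1}$ explicitly.)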
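The sketch below checks both product identities numerically. It then solves a linear system through an explicit LU factorization with `scipy.linalg.lu_factor` and `scipy.linalg.lu_solve`. The second matrix `B` is an arbitrary invertible example. Factoring once is useful when the same $\mathbf{A}$ must be solved against many right-hand sides.

```python
import numpy as np
from scipy.linalg import lu_factor, lu_solve

A = np.array([[4.0, 7.0],
              [2.0, 6.0]])
B = np.array([[1.0, 2.0],
              [3.0, 5.0]])
b = np.array([1.0, 2.0])

# Product identities
det_AB = np.linalg.det(A @ B)
print(f"det(AB) = {det_AB:.4f}, det(A)det(B) = {np.linalg.det(A) * np.linalg.det(B):.4f}")
inv_ok = np.allclose(np.linalg.inv(A @ B), np.linalg.inv(B) @ np.linalg.inv(A))
print(f"(AB)^-1 == B^-1 A^-1: {inv_ok}")

# Solve Ax = b via LU: factor once, then reuse the factors for any b
lu, piv = lu_factor(A)
x = lu_solve((lu, piv), b)
print(f"x = {x}")
print(f"A @ x = {A @ x}")
```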

Python Implementation

```python
import numpy as np

# Define a matrix
A = np.array([[4, 7],
              [2, 6]])

# Determinant
det_A = np.linalg.det(A)
print(f"A =\n{A}")
print(f"det(A) = {det_A:.4f}")

# Inverse
A_inv = np.linalg.inv(A)
print(f"\nA⁻¹ =\n{A_inv}")

# Verify: A @ A^(-1) = I
print(f"\nA @ A⁻¹ =\n{A @ A_inv}")

# Solve linear system Ax = b
b = np.array([1, 2])
x = np.linalg.solve(A, b)  # more numerically stable than inv(A) @ b
print(f"\nSolving Ax = b where b = {b}")
print(f"x = {x}")
print(f"Verification: A @ x = {A @ x}")

# Singular matrix (det = 0)
B = np.array([[1, 2],
              [2, 4]])
det_B = np.linalg.det(B)
print(f"\nSingular matrix B =\n{B}")
print(f"det(B) = {det_B:.6f}")
```

Orthogonality and Projections

Definition

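A set of vectors $\{\mathbf{q}_1, \ldots, \mathbf{q}_k\}$ is orthonormal if $\langle \mathbf{q}_i, \mathbf{q}_j \rangle = \delta_{ij}$, that is, the vectors are mutually orthogonal and each has unit norm.

The orthogonal projection of $\mathbf{v}$ onto a nonzero vector $\mathbf{u}$ is $\operatorname{proj}_{\mathbf{u}}\mathbf{v} = \dfrac{\langle \mathbf{v}, \mathbf{u} \rangle}{\langle \mathbf{u}, \mathbf{u} \rangle}\,\mathbf{u}$.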

Properties

Gram-Schmidt Process: Converts a set of linearly independent vectors into an orthonormal set.

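Orthogonal Complement: For a subspace $W$, $W^\perp = \{\mathbf{x} : \langle \mathbf{x}, \mathbf{w} \rangle = 0 \text{ for all } \mathbf{w} \in W\}$.

Projection Matrix: The projection onto the column space of $\mathbf{A}$ (assuming linearly independent columns) is $\mathbf{P} = \mathbf{A}(\mathbf{A}^T\mathbf{A})^{-1}\mathbf{A}^T$.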
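The orthogonal complement and the projection matrix fit together. $\mathbf{P}$ maps any $\mathbf{y}$ into the column space of $\mathbf{A}$, and the residual $\mathbf{y} - \mathbf{P}\mathbf{y}$ lands in its orthogonal complement, so $\mathbf{A}^T(\mathbf{y} - \mathbf{P}\mathbf{y}) = \mathbf{0}$. The sketch below uses the same $\mathbf{A}$ as this section's implementation. The vector `y` is an assumption of this example, chosen so that it does not already lie in the column space.

```python
import numpy as np

A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
y = np.array([1.0, 2.0, 4.0])

# Projection onto col(A) and the residual
P = A @ np.linalg.inv(A.T @ A) @ A.T
y_proj = P @ y
residual = y - y_proj

# An orthogonal projection matrix is idempotent (P^2 = P) and symmetric (P^T = P)
print(f"P @ P == P: {np.allclose(P @ P, P)}")
print(f"P^T == P:   {np.allclose(P.T, P)}")

# The residual lies in the orthogonal complement of col(A): A^T r = 0
print(f"A^T @ residual = {np.round(A.T @ residual, 12)}")

# y splits into a part in col(A) plus a part orthogonal to it
print(f"y = {np.round(y_proj, 4)} + {np.round(residual, 4)}")
```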

Python Implementation

```python
import numpy as np

def gram_schmidt(V):
    """Gram-Schmidt orthonormalization."""
    n = V.shape[1]
    Q = np.zeros_like(V, dtype=float)
    for i in range(n):
        q = V[:, i].astype(float)
        for j in range(i):
            q = q - np.dot(Q[:, j], V[:, i]) * Q[:, j]
        Q[:, i] = q / np.linalg.norm(q)
    return Q

# Example vectors
v1 = np.array([1, 1, 0])
v2 = np.array([1, 0, 1])
v3 = np.array([0, 1, 1])
V = np.column_stack([v1, v2, v3])
print(f"Original vectors (columns):\n{V}")

# Orthonormalize
Q = gram_schmidt(V)
print(f"\nOrthonormalized vectors:\n{Q}")

# Verify orthonormality
print(f"\nQ^T @ Q (should be identity):\n{np.round(Q.T @ Q, 10)}")

# Projection onto a vector
u = np.array([1, 0, 0])
v = np.array([3, 4, 0])
proj = (np.dot(v, u) / np.dot(u, u)) * u
print(f"\nProjection of v={v} onto u={u}: {proj}")

# Projection matrix onto column space of A
A = np.array([[1, 0],
              [0, 1],
              [1, 1]])
P = A @ np.linalg.inv(A.T @ A) @ A.T
print(f"\nProjection matrix P:\n{P}")

# Project a vector
y = np.array([1, 2, 3])
y_proj = P @ y
print(f"\nProjection of y={y} onto col(A): {y_proj}")
```

The DFT Matrix

Definition

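The Discrete Fourier Transform can be expressed as a matrix-vector multiplication. The DFT matrix $\mathbf{F}_N \in \mathbb{C}^{N \times N}$ has entries $[\mathbf{F}_N]_{kn} = W_N^{kn}$ for $k, n = 0, 1, \ldots, N-1$,

where $W_N = e^{-j2\pi/N}$.

The DFT of a signal $\mathbf{x} = [x[0], \ldots, x[N-1]]^T$ is $\mathbf{X} = \mathbf{F}_N\mathbf{x}$, that is, $X[k] = \sum_{n=0}^{N-1} x[n]\,W_N^{kn}$ for $k = 0, \ldots, N-1$.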

Properties

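Unitary Property: The normalized DFT matrix $\frac{1}{\sqrt{N}}\mathbf{F}_N$ is unitary; equivalently, $\mathbf{F}_N^H\mathbf{F}_N = N\mathbf{I}$.

Inverse DFT: $\mathbf{x} = \mathbf{F}_N^{-1}\mathbf{X} = \frac{1}{N}\mathbf{F}_N^H\mathbf{X}$

Circulant Matrix Diagonalization: The DFT diagonalizes circulant matrices: $\mathbf{C} = \mathbf{F}_N^{-1}\,\operatorname{diag}(\mathbf{F}_N\mathbf{c})\,\mathbf{F}_N$, where $\mathbf{c}$ is the first column of $\mathbf{C}$. Hence the eigenvalues of $\mathbf{C}$ are the DFT coefficients of $\mathbf{c}$.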

Python Implementation

```python
import numpy as np
from scipy.linalg import circulant

def dft_matrix(N):
    """Construct the N-point DFT matrix."""
    n = np.arange(N)
    k = n.reshape((N, 1))
    W = np.exp(-2j * np.pi * k * n / N)
    return W

# Create 4-point DFT matrix
N = 4
F = dft_matrix(N)
print(f"DFT matrix F_{N}:\n{np.round(F, 4)}")

# Verify: F^H @ F = N * I
print(f"\nF^H @ F / N (should be identity):\n{np.round(F.conj().T @ F / N, 10).real}")

# Compare with numpy FFT
x = np.array([1, 2, 3, 4])
X_matrix = F @ x
X_fft = np.fft.fft(x)
print(f"\nSignal x = {x}")
print(f"DFT via matrix: {np.round(X_matrix, 4)}")
print(f"DFT via FFT: {np.round(X_fft, 4)}")

# Inverse DFT: x = F^H X / N
x_recovered = F.conj().T @ X_matrix / N
print(f"\nRecovered signal: {np.round(x_recovered.real, 4)}")

# Diagonalization of circulant matrix
c = [1, 2, 0, 0]
C = circulant(c)
print(f"\nCirculant matrix C:\n{C}")

# Eigenvalues are the DFT of the first column. eigvals returns them in no
# fixed order, so round (to suppress floating-point noise) and sort both
# lists before comparing them element by element.
eigenvalues_theory = np.fft.fft(c)
eigenvalues_actual = np.linalg.eigvals(C)
print(f"\nEigenvalues from DFT: {np.sort(np.round(eigenvalues_theory, 4))}")
print(f"Actual eigenvalues: {np.sort(np.round(eigenvalues_actual, 4))}")
```

Summary

Vector Operations

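| Operation | Formula |
|---|---|
| Inner product | $\langle \mathbf{x}, \mathbf{y} \rangle = \mathbf{x}^H\mathbf{y}$ |
| Norm | $\lVert \mathbf{x} \rVert = \sqrt{\langle \mathbf{x}, \mathbf{x} \rangle}$ |
| Projection | $\operatorname{proj}_{\mathbf{u}}\mathbf{v} = \frac{\langle \mathbf{v}, \mathbf{u} \rangle}{\langle \mathbf{u}, \mathbf{u} \rangle}\mathbf{u}$ |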

Matrix Properties

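| Property | Condition |
|---|---|
| Symmetric | $\mathbf{A} = \mathbf{A}^T$ |
| Orthogonal | $\mathbf{Q}^T\mathbf{Q} = \mathbf{I}$ |
| Unitary | $\mathbf{U}^H\mathbf{U} = \mathbf{I}$ |
| Invertible | $\det(\mathbf{A}) \neq 0$ |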

Key Decompositions

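| Decomposition | Form | Application |
|---|---|---|
| Eigendecomposition | $\mathbf{A} = \mathbf{V}\boldsymbol{\Lambda}\mathbf{V}^{-1}$ | System analysis |
| Spectral (symmetric) | $\mathbf{A} = \mathbf{Q}\boldsymbol{\Lambda}\mathbf{Q}^T$ | Signal energy |
| DFT | $\mathbf{X} = \mathbf{F}_N\mathbf{x}$ | Frequency analysis |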